OpenAI’s latest flagship model, GPT-5, was recently released to ChatGPT users with many new capabilities focused on healthcare applications.

Notably, the launch of GPT-5 followed a White House event where tech and health leaders, including OpenAI, committed to building a patient-centric and interoperable health data ecosystem. Together, these two developments may shape how people search, decide, and document in healthcare. But how might the growing use of AI affect the way patients seek out health information or how clinicians manage care?

What’s New in GPT-5 (and Why It Matters for Healthcare)

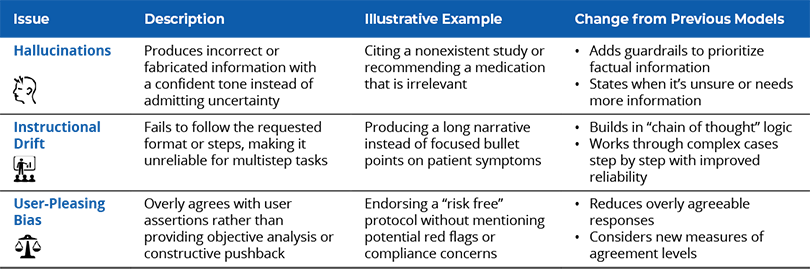

GPT-5 was announced as a “legitimate PhD-level expert” with state-of-the-art performance across health, visual perception, writing, math, coding, and more. The launch event also described targeted fixes for issues affecting earlier AI models.

These improvements include minimizing hallucinations, reducing instructional drift (failure to follow instructions), and curbing user-pleasing bias (sycophancy), all of which are especially important for healthcare applications.

In short, GPT-5’s core upgrades aim to deliver accurate information, closer adherence to user requests, and a more objective tone. These are precisely the traits that make an assistant safer to consult with health questions.

The Patient Perspective: Clearer Answers, Real Risks

Patients will naturally use GPT-5 to decode test results, compare treatment options, or draft questions for upcoming visits. Since GPT-5 is the new default in ChatGPT’s free tier, this type of use will become even more widespread. To be clear, OpenAI frames GPT-5 as a partner that helps patients understand results and ask better questions, not as a replacement for a clinician.

- The upside is that a clearer explanation can reduce anxiety and improve shared decision-making. At the launch event, an oncology patient described how ChatGPT helped them interpret a biopsy showing advanced carcinoma and think through radiation options when their physicians disagreed on the course of treatment.

- However, risks remain. A recent peer-reviewed case report described a patient who developed bromide poisoning after taking AI advice to swap table salt for sodium bromide, leading to hospitalization and psychosis.1

Even with a safer model, misinterpretation and overreliance are real dangers when patients act on answers without clinical oversight.

Ideally, patients could use GPT-5 to become better informed about their care and foster more productive dialogue with clinicians. For example, they could look up key questions to ask before an appointment with their primary care provider or understand complex medication plans after a consultation with a specialist. When used this way, GPT-5 can narrow the information asymmetry that often leaves patients feeling confused or uncertain when navigating the healthcare system.

The goal is to strike a responsible balance: using large language models (LLMs) as a starting point to guide understanding and provide education, without letting them substitute for trusted medical judgment.

The Provider Perspective: Accountability and Trust

For clinicians, LLMs and AI in general can be a double-edged sword.

They promise to streamline certain tasks and improve patient interactions. For example, ambient listening can be used to capture conversations with patients, prepare visit summary notes, and queue orders in the chart. Certain EHR systems are already exploring GPT-like assistants to help write chart notes or answer patient messages.

However, the challenges and risks for clinicians are very real.

- They must still validate AI-generated content—a growing responsibility that can feel like yet another task in an already heavy workload.

- If patients bring printouts of a ChatGPT conversation into the clinic (an increasingly plausible scenario), clinicians might need to spend time addressing misconceptions or confirming what the AI got right.

“Arbitrating” the information from an anonymous AI model is a new kind of burden. Clinicians will need to build trust with patients around these tools, perhaps by acknowledging the usefulness of LLMs like GPT-5 while also addressing potential inaccuracies that patients might not have considered in their prompts.

As Microsoft’s health platform CTO Harjinder Sandhu cautioned, an AI might “make up information about [a] patient or omit important information,” and if clinicians aren’t vigilant, such errors “can lead to catastrophic consequences for that patient.”

The Bigger Picture

The White House and the Centers for Medicare & Medicaid Services (CMS) met with tech and healthcare leaders, including OpenAI, on July 30, 2025, to discuss how to build a more patient-focused digital health ecosystem. The primary objective of the resulting announcement was to strengthen data interoperability and improve the secure sharing of patient information while reducing administrative burden.

One key idea from the announcement was to create “smart health records” that use an AI assistant. In this model, LLMs like GPT-5 could access patient data to provide useful, timely information for both patients and clinicians. For example, a patient could ask the AI questions about a recent MRI. Or a clinician could have an EHR draft a referral letter using details from the patient’s chart.

But how can privacy and security be maintained when an AI has access to so much sensitive information? The healthcare industry already struggles to get different platforms and systems to communicate with each other seamlessly. Introducing AI into the mix creates both opportunities and complexity, which call for new standards and careful navigation.

What Can Healthcare Leaders Do Next?

Organizations at the forefront of applying AI have launched pilots, created governance guardrails, and trained their workforces to integrate AI into their workflows. This approach emphasizes prudent and governed experimentation and can help ensure that AI is introduced responsibly into the healthcare system.

A phased approach may include:

For now, it’s expected that health organizations will proceed with caution, starting with low-risk tasks before relying on AI for more critical diagnostic or treatment decisions.

Looking to unlock the potential of AI at your organization?

ECG has developed the Cipher Collective, a strategic AI partner network that drives scalable transformation for health systems. Through this network, organizations can design and implement relevant AI solutions using industry best practice methodologies.

1 Audrey Eichenberger, Stephen Thielke, and Adam Van Buskirk, “A Case of Bromism Influenced by Use of Artificial Intelligence,” Annals of Internal Medicine: Clinical Cases 4.8 (2025): e241260.

2 Anivarya Kumar et al., “A Cross-Sectional Study of GPT-4–Based Plain Language Translation of Clinical Notes to Improve Patient Comprehension of Disease Course and Management,” NEJM AI 2.2 (2025): AIoa2400402.